[Operating System] The Dining Philosophers Problem

Last week we are introduced to some concepts of synchronization in operating systems. The main challenge is to ensure data integrity when multiple threads/tasks are running concurrently.

There are several classic situations in regard of this endeavor. Among them one particular question caught my attention. It is the dining philosophers problem.

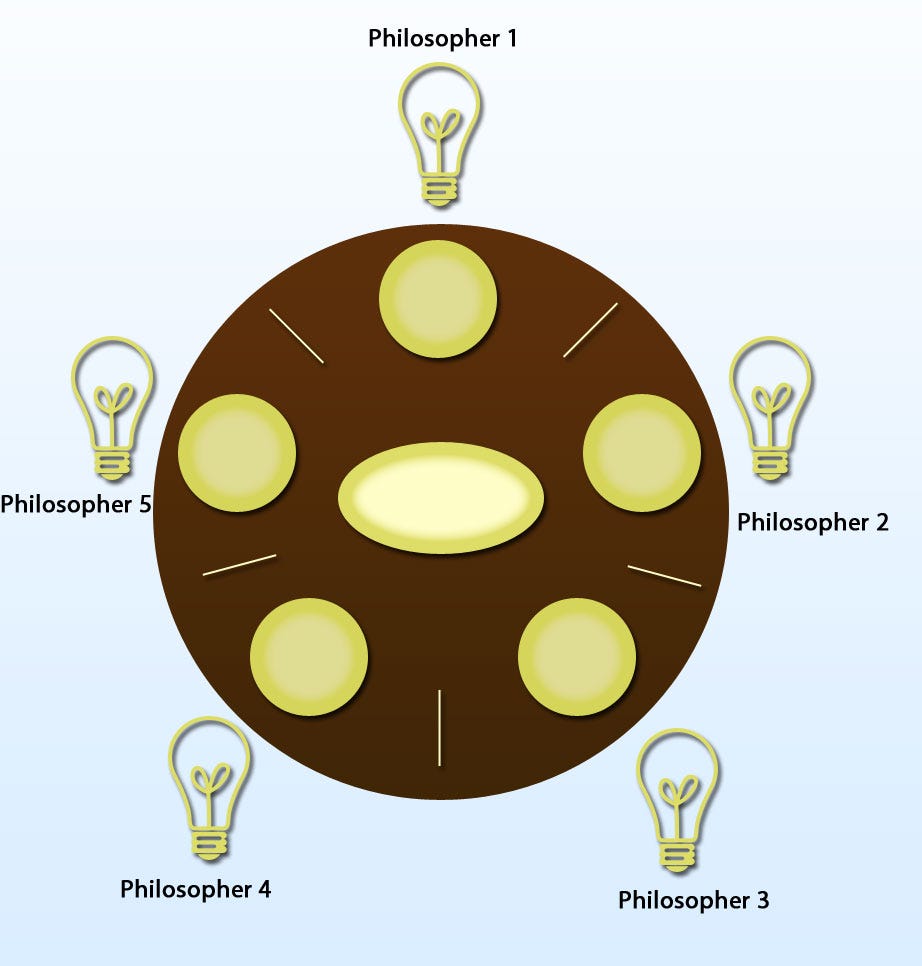

The Dining Philosophers Scenario

- Five philosophers are sitting at a rounded dining table.

- There is one chopstick between each pair of adjacent philosophers.

- Philosophers are either thinking or eating.

- Whenever a philosopher wishes to eat, she first needs to find two chopsticks.

- If the hungry philosopher does not have two chopsticks (i.e. one or two of her neighbors already picked up the chopstick) she will have to wait until both chopsticks are available.

- When a philosopher finishes eating, she puts down both chopsticks to their original places, and resumes thinking.

- There is an infinite amount of food on their plate, so they only need to worry about the chopsticks.

There are a few conditions:

- Philosophers are either thinking or eating. They do not talk to each other.

- Philosophers can only fetch chopsticks placed between them and their neighbors.

- Philosophers cannot take their neighbors’ chopsticks away while they are eating.

- Hopefully no philosophers should starve to death (i.e. wait over a certain amount of time before she acquires both chopsticks).

The Real Situation

As we probably have guessed, this is not just a problem of possible homicide of philosophers. The failures these philosophers may experience are the difficulties that arise in real computer programming when multiple programs need exclusive access to shared resources. It is about how to design a discipline of behavior-or more specifically, a concurrent algorithm-with the following three situation to avoid:

- deadlocks

- resource starvation (livelock)

- Data Corruption

Deadlocks

In an operating system, a deadlock occurs when a process or thread enters a waiting state because a requested system resource is held by another waiting process, which in turn is waiting for another resource held by another waiting process.

Basically, when a deadlock occurs, the program halts because no progress is available anymore. Think about what would happen if we instruct the philosophers as such (when they stops thinking and wishes to eat):

- If both adjacent chopsticks are available, pick them up and eat (for sure).

- If there is only one adjacent chopstick available at the time, pick up that chopstick while waiting for the other chopstick to become available.

- If there are no chopsticks available, wait until any becomes available.

This is a simple and intuitive solution, but also a dangerous one. When all five philosophers pick up their left chopstick at the same time, they will wait indefinitely because each is waiting for another to put down their chopsticks.

Resource Starvation (Livelock)

Resource starvation is a problem encountered in concurrent computing where a process is perpetually denied necessary resources to process its work.

The term starving is quite self-explanatory. If we are not careful with our algorithm, the schedule may result in a situation where one philosopher is constantly bypassed for getting both chopsticks.

Finite Bypass is what we want. It means that there exists a limit of bypass counts for any process before being allowed access to the shared resource. If an algorithm is starvation-free, it has finite bypass.

Data Corruption

Data corruption refers to errors in computer data that occur during writing, reading, storage, transmission, or processing, which introduce unintended changes to the original data.

In our case, this is not a big concern. The closest thing to a data corruption here would be a scheduling that allows a philosopher to snatch her neighbors’ chopsticks while they are eating. That leaves the unfortunate neighbors in an undefined state of ‘half-full’ or ‘70% full’, which will cause unexpected results in the system.

Some Solutions

There are some established, brilliantly-designed solutions. We look at three of them: resource hierarchy solution, arbitrator solution, and Chandy/Misra solution.

Resource Hierarchy Solution

Dijkstra (the creator of this classic situation) proposed this solution. The idea is this:

- Each resource (in this case, the chopsticks) is assigned a partial order.

- All resources will be requested in order. That is, if a unit of work (a philosopher) needs chopstick #1 and #2, she needs to acquire chopstick #1 first and then #2.

- The order in which the unit of work releases the resources does not matter. I.e. It does not matter if the philosopher puts down chopstick #1 or #2 first.

This design guarantees deadlock-free. Its logic precludes the situation where all five philosophers pick up their chopsticks simultaneously. If four philosophers have already picked up one chopstick, only the highest-numbered chopstick will remain on the table, so the fifth philosopher cannot pick up any chopstick.

One major limitations to this solution is that it requires the list of resources be completely known in advance. If a unit of work finds out it needs a resource numbered 2 while holding resource #4 and #5, it would have to release the two resources first, acquire #2, and re-acquire #4 and #5. It could cause troubles in efficiency.

Arbitrator Solution

The idea of this solution is to introduce a third party that monitors the usage of resources. In this case, we would properly call it a waiter. The principles are as follows:

- When a unit of work wishes to access a resource, it asks the arbitrator for permission first. That is, when a philosopher wishes to eat, she first asks the waiter.

- The arbitrator gives one permission at a time.

- Only the unit that has the permission can access shared resources.

- Releasing a resource does not need permissions.

There are two major setbacks for this solution. First of all, a new central entity is introduced, which would require additional resources. Also, it results in reduced parallelism: if a philosopher is eating and one of their neighbors requests the chopsticks, all other philosophers must wait until this request has been fulfilled even if their chopsticks are still on the table.

Chandy/Misra Solution

K.Mani Chandy and J.Misra introduced this solution in 1984.

- Chopsticks have two state: clean and dirty. All chopsticks are initialized to dirty.

- The chopsticks are allocated to the philosophers with the lower id in the pair at the first place (no chopsticks on the table).

- When a philosopher wishes to eat, she must obtain the chopsticks from her neighbors. If she does not have the chopsticks, she sends a request message to the neighbor who has them.

- When a philosopher with a chopstick receives a request message:

- If the fork is clean, the philosopher keeps it.

- If the fork is dirty, the philosopher cleans it and sends the fork over.

5. After eating, the philosopher’s chopsticks become dirty. If another philosopher has previously requested one of the chopsticks, the philosopher cleans and sends it.

Some strengths of this Algorithm:

- It allows for arbitrary agents to contend for an arbitrary number of resources.

- It is completely distributed and requires no central authority.

- It allows for a large degree of concurrency.

- It solves the starvation problem: the clean / dirty states act as a way of giving preference to the most ‘starved’ processes.

As amazing as it seems, this design actually violates the rule of ‘philosophers do not talk to each other’, though.

Implementation Options

Mutex Locks

There are a couple of mechanisms that implement locks. Mutex (mutual exclusion) is the fundamental synchronization technique. The idea is simple: whenever a work unit accesses the critical section, it first needs a lock that guarantees no one else at this time is accessing the critical section. When the work unit exits the critical section, it returns the lock for other work units to access.

In the dining philosopher problem, we can implement an algorithm with mutexes that guarantee the philosophers not to be interrupted when they are changing their states (e.g. the process of picking up chopsticks).

Pthread API usage:

#include<pthread.h> // Declare a mutex

pthread_mutex_t mutex; // Initialize the mutex

pthread_mutex_init(&mutex, NULL); // Lock the mutex

pthread_mutex_lock(&mutex); // Critical section goes here

... // Unlock the mutex

pthread_mutex_unlock(&mutex); // Clean up the mutex

pthread_mutex_destroy(&mutex);Semaphores

The usage of semaphores is very similar to using mutex locks. Instead of the binary operation in mutex locks (holding lock <-> releasing lock), semaphores function on the idea of ‘number of resources’. Each semaphore is initialized to a number, indicating how many resources associated with that semaphore are available at that moment. When we use sem_wait() the number associated with that semaphore will decrease by one. When we use sem_post() the number associated with that semaphore will increase by one.

Semaphores and mutex lock are interchangeable if there are only one resource associated with it (which will make it a binary semaphore).

Pthread API usage:

#include <semaphore.h> // Create a semaphore

sem_t mutex; // Initialize the semaphore to its default attributes,

// and the number of resources to 1.

sem_init(&mutex, 0, 1); // Acquire the semaphore

sem_wait(&mutex); // Critical section goes here

... // Releasing the semaphore

sem_post(&mutex);Implementation using Pthread API with C

In addition to the built in mutex locks, I also used condition variable. In case we accidentally forgot what is a condition variable introduced in last week’s note, here is a brief review:

Condition Variable

In short, a condition variable enables a mutex lock to wait for a certain condition to become true before resuming execution. When the execution encounters a condition variable, it will pause and pass over the processor to another (waiting) thread, until someone ‘signals’ that conditional variable to keep going. In other context it may be seen in a monitor. Since Pthread does not provide monitor functionality, we would instead associate condition variables onto the mutex itself (why should it be associated with a mutex lock? to prevent race condition.) Let’s look at the basic usage of condition variables from Pthread API first:

#include<pthread.c> // Note: suppose a pthread mutex lock named 'mutex' has already been created and initialized. // Create a condition variable

pthread_cond_t condition; // Initialize the condition variable to its default attributes

pthread_cond_init(&condition, NULL); // Enter a critical section

pthread_mutex_lock(&mutex); // some work

... // Start to wait for a condition to become true

while (some_condition != true)

pthread_cond_wait(&condition, &mutex); // some more work

... // (in some other process) Issue a signal

pthread_cond_signal(&condition); // Exit a critical section

pthread_mutex_unlock(&mutex); // Clean up the condition variable pthread_cond_destroy(&condition[i]);From the usage example above we learnt that

- Condition variables are used inside a critical section(or else it would not be needed anyway).

- Condition variables are generally used in a while loop, that serves to check if a condition becomes true.

The Implementation

The only thing to note is that pthread_cond_destory would not have any effect if there is no condition variable that is currently blocked. The rest should be quite self-explanatory. Happy coding!

REFERENCES

- https://c-program-example.com/2012/02/c-program-to-solve-dining-philosophers-problem.html

- http://pubs.opengroup.org/onlinepubs/007908799/xsh/pthreadcondwait.html

Originally published at https://codecharms.me.

文章同步發表於 Medium。